INSTALLING THE NVIDIA VIRTUAL GPU MANAGER FOR VSPHERE

The NVIDIA Virtual GPU Manager runs on the ESXi host. It is provided as a VIB file, which must be copied to the ESXi host and then installed.

Package installation

To install the vGPU Manager VIB, you need to access the ESXi host via the ESXi Shell or SSH. Refer to VMware’s documentation on how to enable the ESXi Shell or SSH for an ESXi host.

Note: Before proceeding with the vGPU Manager installation, make sure that all VMs are powered off and the ESXi host is placed in maintenance mode. Refer to VMware’s documentation on how to place an ESXi host in maintenance mode.

Use the esxcli command to install the vGPU Manager package:

```
[root@esxi:~] esxcli software vib install -v /NVIDIA-vgx-VMware_ESXi_6.0_Host_Driver_346.42-1OEM.600.0.0.2159203.vib
Installation Result
   Message: Operation finished successfully.
   Reboot Required: false
   VIBs Installed: NVIDIA_bootbank_NVIDIA-vgx-VMware_ESXi_6.0_Host_Driver_346.42-1OEM.600.0.0.2159203
   VIBs Removed:
   VIBs Skipped:
```
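If you script the installation across several hosts, it can help to check the esxcli result text programmatically instead of reading it by eye. The following is a minimal Python sketch (Python 3.6+ assumed), assuming the indented `Key: value` layout shown in the transcript above; `parse_vib_install_result` is a hypothetical helper, not part of any VMware or NVIDIA tool. Note that this guide asks you to reboot the host after installing regardless of the reported `Reboot Required` value.

```python
import re
from typing import Dict, List, Union

Result = Dict[str, Union[str, bool, List[str]]]


def parse_vib_install_result(output: str) -> Result:
    """Parse the 'Installation Result' text printed by `esxcli software vib install`.

    Hypothetical helper: returns the message, the reboot flag, and the lists of
    VIB IDs that were installed, removed, and skipped.
    """
    fields: Dict[str, str] = {}
    for line in output.splitlines():
        # Result lines look like "   Key: value"; the value may be empty.
        # Lines that do not start with a letter (e.g. the shell prompt) are ignored.
        match = re.match(r"^\s*([A-Za-z][A-Za-z ]*):\s*(.*)$", line)
        if match:
            fields[match.group(1).strip()] = match.group(2).strip()

    def vib_list(key: str) -> List[str]:
        # Assumption: multiple VIB IDs are separated by commas and/or whitespace.
        return [vib for vib in re.split(r"[,\s]+", fields.get(key, "")) if vib]

    return {
        "message": fields.get("Message", ""),
        "reboot_required": fields.get("Reboot Required", "").lower() == "true",
        "installed": vib_list("VIBs Installed"),
        "removed": vib_list("VIBs Removed"),
        "skipped": vib_list("VIBs Skipped"),
    }
```

For example, passing the captured output above yields `reboot_required` of `False` and a single entry in `installed`, which you could compare against the VIB name you expected to install.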

Reboot the ESXi host and remove it from maintenance mode.
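The whole sequence (enter maintenance mode, install, reboot, exit maintenance mode) can also be driven from the ESXi Shell. The Python sketch below (Python 3.6+ assumed) is one way to do it, not the procedure this guide prescribes: it uses `esxcli system maintenanceMode set` and `esxcli system shutdown reboot` in place of the vSphere Client steps referred to above, and the function names are hypothetical. It assumes all VMs on the host are already powered off and defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess
from typing import List, Tuple

Command = List[str]


def plan_vgpu_manager_install(vib_path: str) -> Tuple[List[Command], List[Command]]:
    """Return (before_reboot, after_reboot) command lists for the install.

    Hypothetical helper; assumes every VM on the host is already powered off.
    """
    if not vib_path.startswith("/"):
        # The transcript above passes the VIB to -v as an absolute path.
        raise ValueError("VIB path must be absolute, e.g. /<file>.vib")
    before_reboot = [
        ["esxcli", "system", "maintenanceMode", "set", "--enable", "true"],
        ["esxcli", "software", "vib", "install", "-v", vib_path],
        # esxcli expects the host to be in maintenance mode for this reboot.
        ["esxcli", "system", "shutdown", "reboot", "--reason", "Install NVIDIA vGPU Manager"],
    ]
    after_reboot = [
        # Run this in a new shell session once the host is back up.
        ["esxcli", "system", "maintenanceMode", "set", "--enable", "false"],
    ]
    return before_reboot, after_reboot


def run(commands: List[Command], dry_run: bool = True) -> None:
    """Print each command (dry run) or execute it, stopping at the first failure."""
    for cmd in commands:
        if dry_run:
            print(" ".join(shlex.quote(part) for part in cmd))
        else:
            subprocess.check_call(cmd)  # raises CalledProcessError on a non-zero exit
```

Calling `run(before)` only prints the commands for review; `run(before, dry_run=False)` executes them. Because the host reboots at the end of the first list, the second list has to be run separately after the host comes back.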

Verifying installation

After the ESXi host has rebooted, verify that the GRID package installed and loaded correctly by checking for the NVIDIA kernel driver in the list of loaded kernel modules.

```
[root@esxi:~] vmkload_mod -l | grep nvidia
nvidia                   5    8420
```

If the nvidia driver is not listed in the output, check dmesg for any load-time errors reported by the driver.
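The `vmkload_mod -l | grep nvidia` check can also be done from a script, for example when validating several hosts. The following is a minimal Python sketch (Python 3.6+ assumed; function names are hypothetical). It matches the module name exactly in the first column rather than searching for the substring, so a module whose name merely contains "nvidia" does not give a false positive. If no suitable interpreter is available on the host, capture the command output and parse it elsewhere.

```python
import subprocess


def nvidia_module_loaded(vmkload_output: str) -> bool:
    """Return True if a module named exactly 'nvidia' appears in `vmkload_mod -l` output."""
    for line in vmkload_output.splitlines():
        fields = line.split()
        # The first column is the module name; compare it exactly.
        if fields and fields[0] == "nvidia":
            return True
    return False


def check_on_host() -> bool:
    """Run vmkload_mod on the ESXi host itself (assumes it is on PATH)."""
    output = subprocess.check_output(["vmkload_mod", "-l"], universal_newlines=True)
    return nvidia_module_loaded(output)
```

If `check_on_host()` returns `False`, the next step is the same as above: look in dmesg for load-time errors from the driver.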

Verify that the NVIDIA kernel driver can successfully communicate with the GRID physical GPUs in your system by running the nvidia-smi command, which should produce a listing of the GPUs in your platform:

```
[root@esxi:~] nvidia-smi
Tue Mar 10 17:56:22 2015
+------------------------------------------------------+
| NVIDIA-SMI 346.42     Driver Version: 346.42         |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GRID K2             On   | 0000:04:00.0     Off |                  Off |
| N/A   27C    P8    27W / 117W |     11MiB /  4095MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
|   1  GRID K2             On   | 0000:05:00.0     Off |                  Off |
| N/A   27C    P8    27W / 117W |     10MiB /  4095MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
|   2  GRID K2             On   | 0000:08:00.0     Off |                  Off |
| N/A   32C    P8    27W / 117W |     10MiB /  4095MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
|   3  GRID K2             On   | 0000:09:00.0     Off |                  Off |
| N/A   32C    P8    27W / 117W |     10MiB /  4095MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
|   4  GRID K2             On   | 0000:86:00.0     Off |                  Off |
| N/A   24C    P8    27W / 117W |     10MiB /  4095MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID  Type  Process name                               Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

If nvidia-smi fails to report the expected output, check dmesg for NVIDIA kernel driver messages.
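The table above is meant for people; for a scripted check it is easier to ask nvidia-smi for machine-readable output and compare the GPU list with what you expect to be installed. The following is a minimal Python sketch (Python 3.6+ assumed), assuming your nvidia-smi build supports `--query-gpu` (`nvidia-smi --help-query-gpu` lists the available fields); `GpuInfo`, `parse_gpu_query`, and `list_gpus` are hypothetical names.

```python
import csv
import io
import subprocess
from typing import List, NamedTuple


class GpuInfo(NamedTuple):
    index: int
    name: str
    bus_id: str


def parse_gpu_query(csv_text: str) -> List[GpuInfo]:
    """Parse `nvidia-smi --query-gpu=index,name,pci.bus_id --format=csv,noheader` output."""
    gpus = []
    # skipinitialspace drops the space nvidia-smi prints after each comma.
    for row in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if not row:
            continue  # skip blank lines
        index, name, bus_id = row
        gpus.append(GpuInfo(int(index), name, bus_id))
    return gpus


def list_gpus() -> List[GpuInfo]:
    """Query the GPUs on the current host (assumes nvidia-smi is on PATH)."""
    output = subprocess.check_output(
        ["nvidia-smi", "--query-gpu=index,name,pci.bus_id", "--format=csv,noheader"],
        universal_newlines=True,
    )
    return parse_gpu_query(output)
```

On the host shown above you would expect `len(list_gpus())` to be 5; a smaller count suggests the driver is not communicating with every physical GPU, and dmesg is the place to look.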

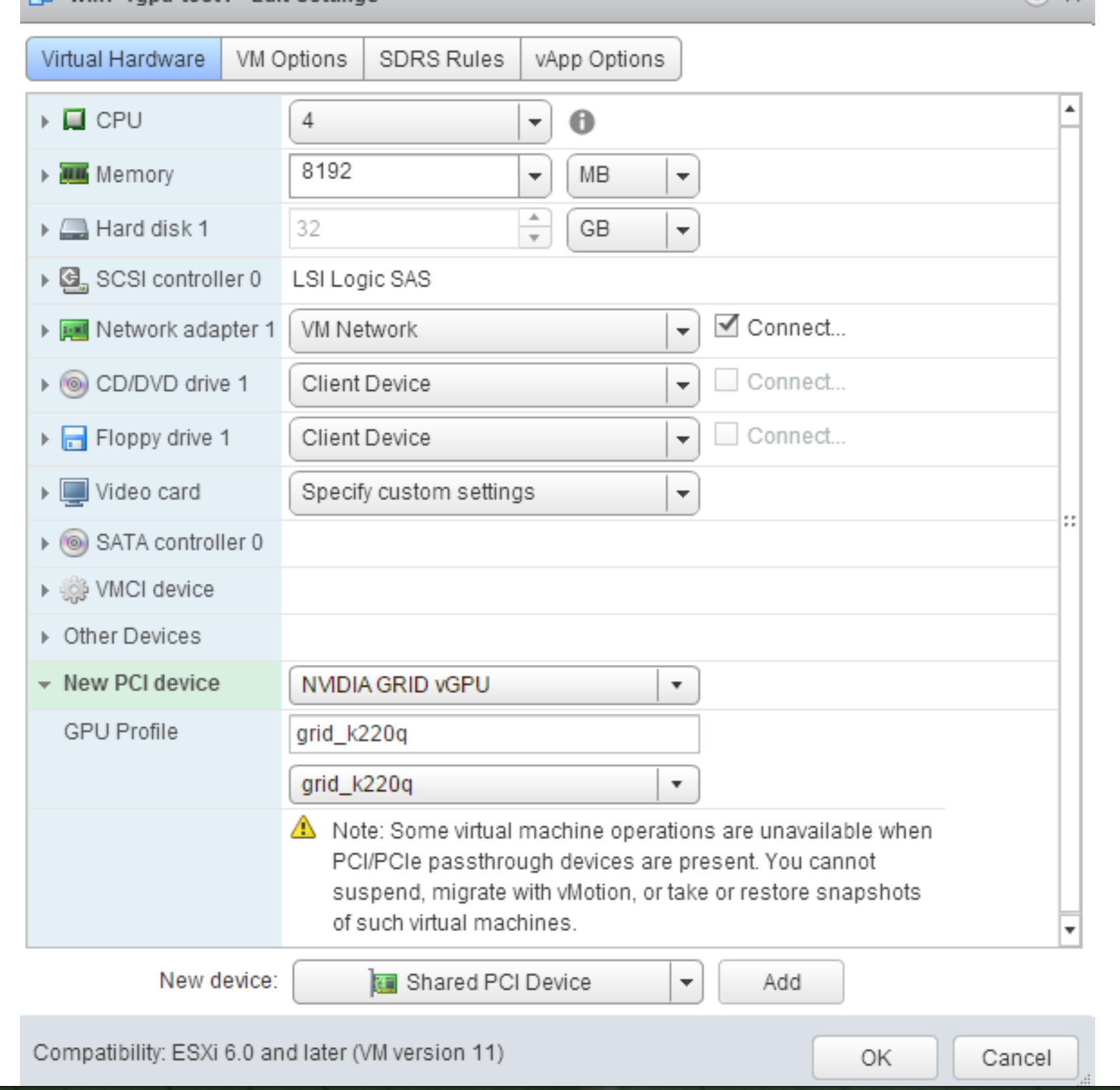

CONFIGURING A VM WITH VIRTUAL GPU

Note: VMware vSphere does not support the VM console in vSphere Web Client for VMs configured with vGPU. Make sure that you have installed an alternate means of accessing the VM (such as VMware Horizon or a VNC server) before you configure vGPU. The VM console in vSphere Web Client should become active again once the vGPU parameters are removed from the VM’s configuration.

To configure vGPU for a VM:

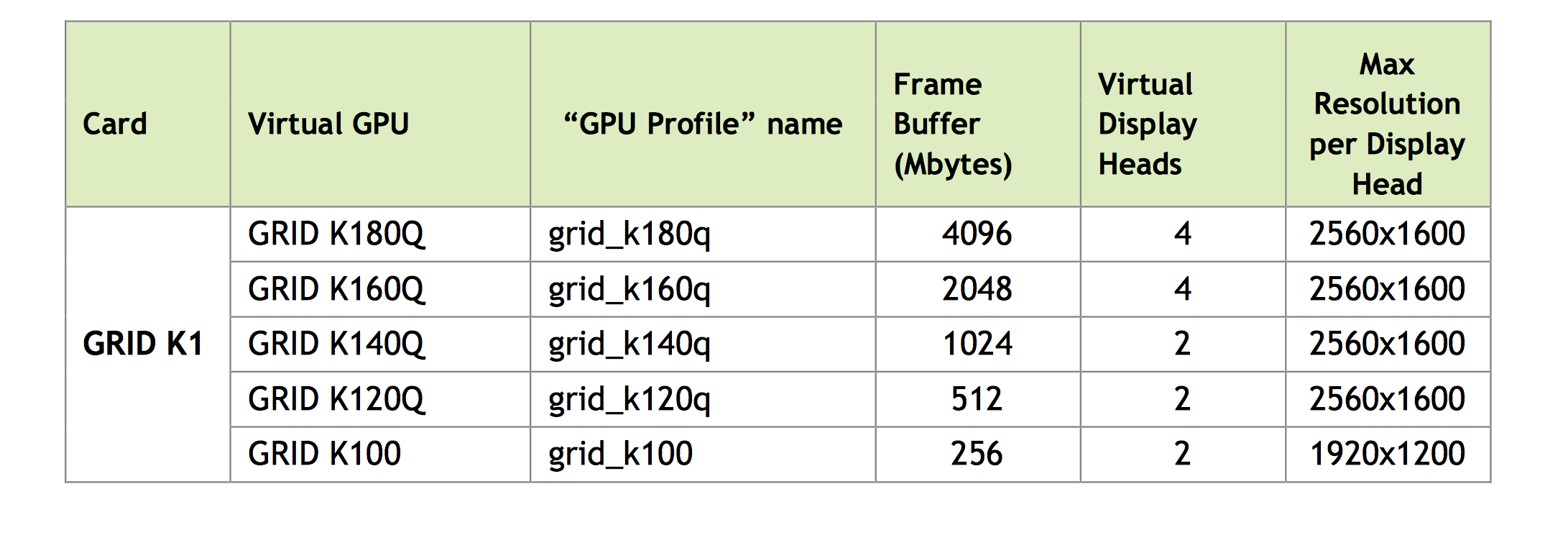

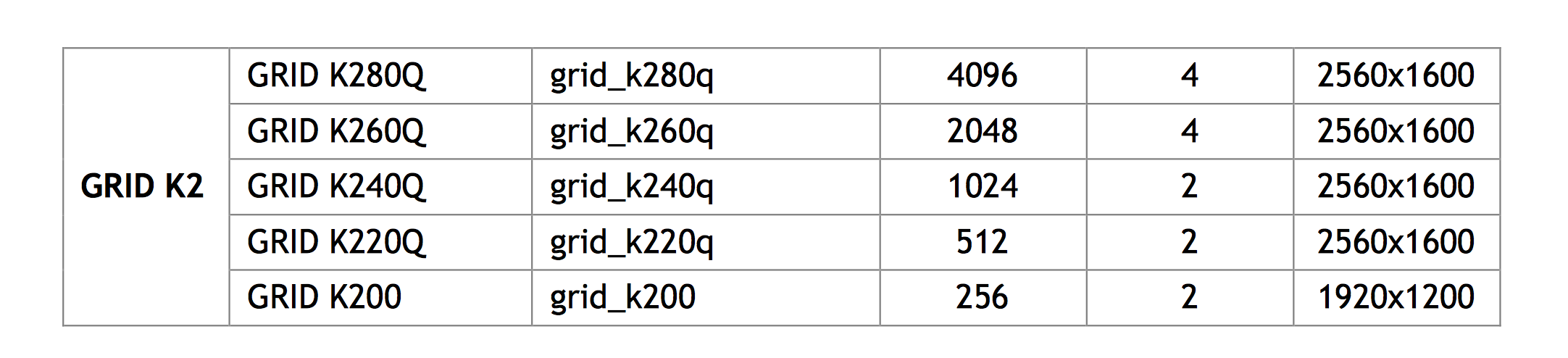

This should auto-populate NVIDIA GRID vGPU in the PCI device field. In the GPU Profile dropdown menu, select the type of vGPU you wish to configure. The supported vGPU types are listed in Table 1.

BOOTING THE VM AND INSTALLING DRIVERS

Once you have configured a VM with a vGPU, start the VM. The VM console in vSphere Web Client is not supported in this vGPU release; use VMware Horizon or VNC to access the VM’s desktop.

The VM should boot to a standard Windows desktop in VGA mode at 800×600 resolution. You can use the Windows screen resolution control panel to switch to other standard resolutions, but to fully enable vGPU operation the NVIDIA driver must be installed, just as for a physical NVIDIA GPU.

- Copy the 32- or 64-bit NVIDIA Windows driver package to the guest VM and execute it to unpack and run the driver installer.

- Click through the license agreement.

- Select Express Installation.

- Once driver installation completes, the installer may prompt you to restart the platform. Select Restart Now to reboot the VM, or exit the installer and reboot the VM when ready.

Once the VM restarts, it will boot to a Windows desktop. Verify that the NVIDIA driver is running by right-clicking on the desktop: the NVIDIA Control Panel should be listed in the menu. Select it to open the control panel. Selecting “System Information” in the NVIDIA Control Panel reports the virtual GPU that the VM is using, its capabilities, and the NVIDIA driver version that is loaded.